artificer - Building docker images without docker

May 28, 2018

Introduction

At JustWatch we run almost all our workloads in Kubernetes. There are a few exceptions but we always try to reduce the number of services not running in Kubernetes. One such exception used to be our Continuous Integration nodes. We run mostly static Go binaries for our backend services. These are packaged into Docker images as the unit of deployment. Of course we could run them outside of Docker as well, but packaging them up into Docker containers provides a handy unit that can also include any kind of necessary assets and configuration while providing a certain level of isolation against other containers. This all fits in nicely with the existing Docker and Kubernetes ecosystem including hosted Container Registries and managed Kubernetes clusters.

So far we have built these Go binaries in dedicated Docker containers and created minimal Docker images using multi-stage Dockerfiles. This process has the advantage of providing very high isolation and the guarantee that these builds are reproducible.

The disadvantages are that we needed Docker and couldn’t benefit from the much improved build and test caching in recent Go releases.

Starting from Scratch

Since we had some spare resources in our Kubernetes Clusters and the old builder VMs were harder to scale, we looked into how we could move most of our builds into Kubernetes.

In this process we looked at most of the existing options, like Google Cloud Container Builder, img, FTL, Bazel and most recently kaniko.

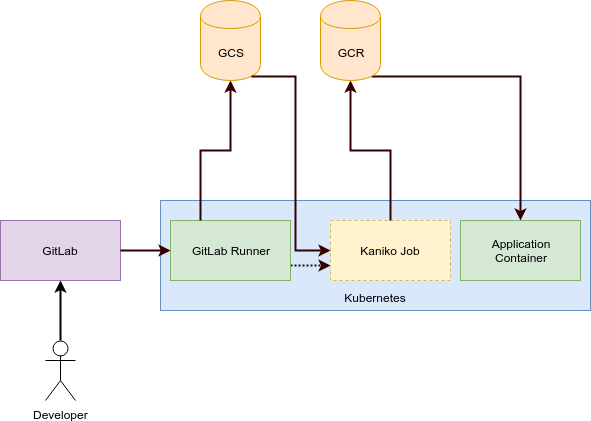

We were thrilled by kaniko since it’s almost exactly what we were looking for. It works by uploading your build context to Google Cloud Storage and creating a Kubernetes Job to run kaniko in an (almost) empty Container. kaniko is pretty amazing since it supports almost all Dockerfile statements.

However, there is one slight drawback: it needs to move a lot of data around. First it creates the build context and uploads it to the object store. Then it creates the Job, which downloads the context, only to unpack it and upload the resulting image to a Docker registry (i.e. another object store).

This makes a lot of sense in the general case, but for more than 90% of our builds we don’t need most of the features in a Dockerfile.

The only statements we’re using are:

- FROM: to specify a base image
- ADD: to add some files, at least a (static) binary
- CMD: to specify the entrypoint

If you look at the definition of Docker images you’ll see that they are only some compressed tar and JSON files. That didn’t seem like something you should need a multi-million LoC client-server application with elevated privileges (Docker) for.
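To make the "compressed tar and JSON files" point concrete, here is a minimal sketch in Go that uses only the standard library. It packs a file into a gzip-compressed tar archive (a layer) and writes a small JSON config that references the layer by the SHA-256 digest of its uncompressed tar. The names `file`, `buildLayer` and `newConfig` are hypothetical and are not taken from artificer or kaniko. The JSON field names follow the OCI image config layout as we understand it, and the sketch leaves out the manifest and the registry upload entirely.

```go
package main

import (
	"archive/tar"
	"bytes"
	"compress/gzip"
	"crypto/sha256"
	"encoding/hex"
	"encoding/json"
	"fmt"
)

// file is a hypothetical in-memory description of one file to add to the layer.
type file struct {
	Name string
	Mode int64
	Data []byte
}

// buildLayer writes the files into a tar archive, hashes the uncompressed tar
// (the layer's diff ID) and returns the gzip-compressed archive.
func buildLayer(files []file) (layer []byte, diffID string, err error) {
	var raw bytes.Buffer
	tw := tar.NewWriter(&raw)
	for _, f := range files {
		hdr := &tar.Header{Typeflag: tar.TypeReg, Name: f.Name, Mode: f.Mode, Size: int64(len(f.Data))}
		if err := tw.WriteHeader(hdr); err != nil {
			return nil, "", err
		}
		if _, err := tw.Write(f.Data); err != nil {
			return nil, "", err
		}
	}
	if err := tw.Close(); err != nil {
		return nil, "", err
	}
	sum := sha256.Sum256(raw.Bytes())
	diffID = "sha256:" + hex.EncodeToString(sum[:])

	var gz bytes.Buffer
	zw := gzip.NewWriter(&gz)
	if _, err := zw.Write(raw.Bytes()); err != nil {
		return nil, "", err
	}
	if err := zw.Close(); err != nil {
		return nil, "", err
	}
	return gz.Bytes(), diffID, nil
}

// imageConfig holds a small subset of the image config JSON.
type imageConfig struct {
	Architecture string `json:"architecture"`
	OS           string `json:"os"`
	Config       struct {
		Env []string `json:"Env,omitempty"`
		Cmd []string `json:"Cmd,omitempty"`
	} `json:"config"`
	RootFS struct {
		Type    string   `json:"type"`
		DiffIDs []string `json:"diff_ids"`
	} `json:"rootfs"`
}

// newConfig renders the config JSON for an image with a single layer.
func newConfig(diffID string, env, cmd []string) ([]byte, error) {
	var c imageConfig
	c.Architecture, c.OS = "amd64", "linux" // assumed target platform
	c.Config.Env, c.Config.Cmd = env, cmd
	c.RootFS.Type = "layers"
	c.RootFS.DiffIDs = []string{diffID}
	return json.MarshalIndent(c, "", "  ")
}

func main() {
	layer, diffID, err := buildLayer([]file{{Name: "server-binary", Mode: 0755, Data: []byte("placeholder")}})
	if err != nil {
		panic(err)
	}
	cfg, err := newConfig(diffID, []string{"LOGLEVEL=1"}, []string{"/server-binary"})
	if err != nil {
		panic(err)
	}
	fmt.Printf("layer: %d bytes (gzip)\n%s\n", len(layer), cfg)
}
```

A real builder would also have to fetch the base image's layers, merge its config with the new one and push a manifest, which is the part the kaniko libraries handle for us.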

Our first attempt was to build a custom docker builder using the Docker/Moby libraries, but those are rather tightly coupled. So we looked at kaniko as it already almost did what we needed. In the end we didn’t use it. Instead, we took the libraries built for kaniko and used them to create our custom docker image builder.

Artificer

Our tool does not support Dockerfiles. We wanted to strictly limit its complexity and support only our core use cases.

There are only a few command line flags. This command shows all the supported flags:

    artificer \
      --baseimage alpine:3.7 \
      --files server-binary \
      --files conf/ \
      --cmd /server-binary \
      --target gcr.io/project/server:latest \
      --env LOGLEVEL=1 \
      --env ENVIRONMENT=prod

| CLI-Flag | Shorthand | Usage |
|---|---|---|
| `--baseimage` | `-b` | The path to the base image (FROM in Dockerfiles). The builder will pull it from a public Docker registry, or from the Google Container Registry if provided with appropriate credentials. |
| `--target` | `-t` | The path to the destination repository where the new image gets pushed to. |
| `--files` | `-f` | The path to a file or directory that will be included at the container root. Use this flag multiple times to specify multiple files or directories. |
| `--env` | `-e` | An environment variable definition of the form VARIABLE=VALUE. Use this flag multiple times to specify multiple environment variables. |
| `--cmd` | `-c` | The command that runs automatically once the container is launched (CMD in Dockerfiles). |
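The repeatable `--files` and `--env` flags described above can be sketched with Go's standard `flag` package. This is only an illustration of the pattern and not artificer's actual flag handling: the `multiFlag` and `options` types and the `parseArgs` function are hypothetical, and the shorthand forms (`-b`, `-f` and so on) are left out for brevity.

```go
package main

import (
	"flag"
	"fmt"
	"os"
	"strings"
)

// multiFlag collects every occurrence of a repeatable flag.
type multiFlag []string

func (m *multiFlag) String() string {
	if m == nil {
		return ""
	}
	return strings.Join(*m, ",")
}

func (m *multiFlag) Set(v string) error {
	*m = append(*m, v)
	return nil
}

// options mirrors the long flag names from the table (hypothetical struct).
type options struct {
	BaseImage string
	Target    string
	Cmd       string
	Files     multiFlag
	Env       multiFlag
}

// parseArgs parses the arguments and checks that every --env value
// has the form VARIABLE=VALUE.
func parseArgs(args []string) (*options, error) {
	var o options
	fs := flag.NewFlagSet("artificer", flag.ContinueOnError)
	fs.StringVar(&o.BaseImage, "baseimage", "", "base image (FROM in Dockerfiles)")
	fs.StringVar(&o.Target, "target", "", "destination repository for the new image")
	fs.StringVar(&o.Cmd, "cmd", "", "command to run when the container starts")
	fs.Var(&o.Files, "files", "file or directory to add (repeatable)")
	fs.Var(&o.Env, "env", "VARIABLE=VALUE definition (repeatable)")
	if err := fs.Parse(args); err != nil {
		return nil, err
	}
	for _, e := range o.Env {
		if i := strings.Index(e, "="); i <= 0 {
			return nil, fmt.Errorf("invalid --env value %q, want VARIABLE=VALUE", e)
		}
	}
	return &o, nil
}

func main() {
	o, err := parseArgs(os.Args[1:])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	fmt.Printf("base=%s target=%s cmd=%s files=%v env=%v\n",
		o.BaseImage, o.Target, o.Cmd, []string(o.Files), []string(o.Env))
}
```

The standard `flag` package accepts both `-files` and `--files`, so the example invocation above parses unchanged with this sketch.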

The tool operates directly on the binaries built by our GitLab Runners inside Kubernetes. There is no need to create a build context, upload it and download it again. This saves a good deal of time and resources.

Wrapping up

This tool allowed us to considerably simplify and speed up our build infrastructure while saving costs at the same time:

- More CI Instances, one per Kubernetes Node

- Less cost due to fewer GitLab CI VMs

- Faster builds due to build and test caching (roughly 20-70% improvement)

- Higher productivity due to less waiting for builds

We hope you find this little project interesting and maybe helpful. If this tool doesn’t cover your use case, you are probably better off with kaniko or plain Docker.

If you want to learn more, check out the code on GitHub.

This post was brought to you by Dominik Schulz, Head of Infrastructure, and Jannis Piek, Junior SWE.